I am working with a number of Life Science manufacturing companies that have taken a strategic approach to their manufacturing systems landscape. There is a lot of buzz on this topic in the industry, which makes it all the more interesting, though not without challenges. I am generally fond of taking a historical perspective, so I decided to do the same for MES software products in the life science industry. This perspective is just mine, and I am sure my peers in the industry could offer many more (a subtle hint).

So let’s start in the 1980s, the decade that gave us Computer Integrated Manufacturing (CIM) and a growing awareness of the role that computers play in manufacturing operations. The focus at that time was on how computer systems, aka software, could be used to increase efficiency and manage complexity. In fact, computer technology was gaining so much momentum that it was considered a major element in revolutionizing the manufacturing landscape, in parallel with the advent of the Lean movement.

This gave birth to quite a few Manufacturing Execution System (MES) product companies in the 1980s. The 90s followed with a massive development and spread of information technology, which is now at the core of everything we do, not only in manufacturing. Manufacturing operations are becoming so dependent on information that these systems have to be considered at a strategic level. Initially this strategic focus was given to expensive business systems such as ERP; however, it is becoming evident that other systems, specifically MES, sometimes have more impact on the bottom line and should be considered equally strategic.

MES software products evolved in different industries, and their roots manifest themselves in both the functionality and the corresponding MES vendor’s organization. Companies and products that currently serve the Life Science industry generally have their roots in the semiconductor and electronics industries and, understandably, in the Life Science industry itself.

In the industries outside Pharma and Bio-Pharma, MES was introduced to deal with the inherently high level of automation and complexity of the high-volume manufacturing process, where lowering cost and increasing production throughput were crucial. It was virtually impossible to manually manage the wealth and complexity of information, and MES provided a solution. The MES products were centered on a discrete workflow model that allowed rich modeling capabilities while at the same time allowing customizations. In fact, early MESs were merely toolboxes with a workflow engine, rich data modeling capabilities, and tools to custom-build user interfaces and business logic.

In the Life Science industry the main driver for introducing MES was compliance, or the electronic batch record, and therefore the first such systems provided a “paper-on-glass” solution. The idea was to simply digitize the paper batch records, kind of like the old “overhead projectors”. These systems had simple modeling capabilities and did not allow for much customization. In many cases these “paper-on-glass” systems were supplemented with business logic built as customizations in the automation system. They were commonly implemented in pharmaceutical plants, where the focus on compliance meant low tolerance for customizations and a minimum of change after systems were commissioned. This resulted in MES functionality that was split between the heavily customized automation applications and a “canned” paper-on-glass system to deal with batch records. The Weigh and Dispense feature of these systems was used mostly for traditional pre-weigh activities, where the materials are weighed and staged before the process.

In the 2000s a consolidation started in which some of the independent MES vendors were acquired by the major automation vendors and positioned into the life sciences industry. This introduced the rich modeling capabilities that grew out of the semiconductor and electronics industries to a Life Sciences industry accustomed to “paper-on-glass” systems. This leaves us today with a wide choice of MES products that are rapidly gaining maturity and sophistication in the form of advanced functionality and interoperability. I think that this maturity is an important factor and plays nicely into the strategic nature of most Manufacturing Systems initiatives that I have been involved in. There is still a long road ahead, but I have not been this optimistic about the Manufacturing Systems domain in a long time. It certainly looks like there are some very interesting and also challenging years ahead as we work to execute on these strategic initiatives.

Friday, June 29, 2012

Friday, May 18, 2012

How hard is it to define MI requirements?

Well, let's say it's not straightforward! For quite some time I have been trying to explain the difficulties in providing a clear specification for a Manufacturing Intelligence (MI) system. In addition, the life science industry is still bound by traditional methods of analysis, requirements specs, and functional specs that, simply put, will not work for MI. MI's whole purpose is to provide information to somebody to support his creative behavior as he explores root causes and solutions in the dynamic world of manufacturing operations. (This is an extract from a forthcoming article in Pharmaceutical Engineering.)

The right approach is based on the critical element of understanding how people use information to solve problems and gauge performance. There is a clear need to provide the effective and relevant information consumed by the different roles in manufacturing operations. Identifying what needs to be measured is a fundamental principle, but it is not sufficient; the information also has to be arranged in a usable manner. Therefore it is important to study and understand information consumption patterns by role. Take, for example, the information consumption pattern of a supervisor in a biotech plant who is creatively analyzing a production event.

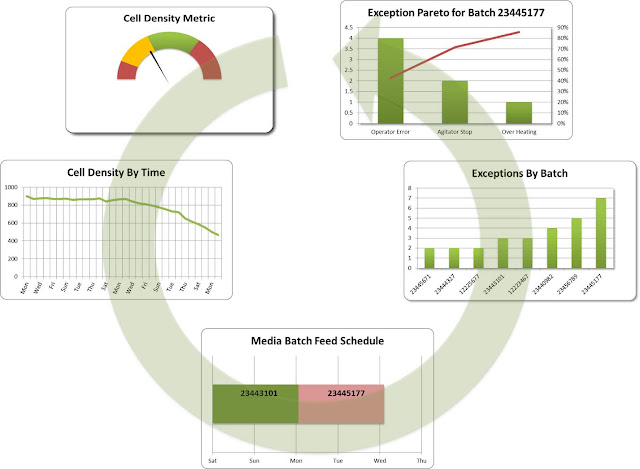

The production supervisor glances at his dashboard and observes that the Cell Density is not within acceptable limits. He immediately navigates to view the “Cell Density by Time” trend over the last 2 weeks and observes a negative trend beginning around “Mon.” that indicates something is seriously not in order.

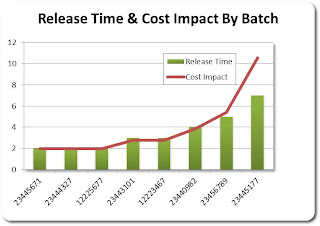

To begin the analysis, he examines the “Media Batch Feed Schedule” to see if there is any correlation between the trend and the Media that is being fed to the bioreactor. This action is obviously based on intuition, possibly because he has seen this before. Seeing that the change to a new Media batch feed coincides with the start of the trend, he decides to take a look at the “Exception By Batch” information and notices that this specific batch had an unusual number of exceptions. He then dives deeper into the data by analyzing an exception Pareto for the suspect batch. He finds a high number of operator errors, which clearly highlights the root cause of the trend. Finally, since he is accountable for operational profits, he decides to take a look at the cost impact of this event in order to understand the impact on the plant's financial performance (see graphic below). Unfortunately the cost impact is substantial, and thus he has to take action to mitigate this increased cost.
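The exception Pareto step in this walkthrough can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `exception_pareto` function, the record fields `batch` and `category`, the batch identifiers, and the counts are all invented and do not come from any real MI product or plant data.

```python
from collections import Counter

def exception_pareto(exceptions, batch_id):
    """Return (category, count, cumulative_percent) rows for one batch,
    ordered from the most to the least frequent exception category."""
    counts = Counter(e["category"] for e in exceptions if e["batch"] == batch_id)
    total = sum(counts.values())
    rows = []
    running = 0
    for category, count in counts.most_common():
        running += count
        rows.append((category, count, round(100.0 * running / total, 1)))
    return rows

# Hypothetical exception log (invented values, for illustration only).
exceptions = (
    [{"batch": "M-102", "category": "operator error"}] * 5
    + [{"batch": "M-102", "category": "equipment alarm"}] * 2
    + [{"batch": "M-102", "category": "material deviation"}]
    + [{"batch": "M-101", "category": "operator error"}]
)

for row in exception_pareto(exceptions, "M-102"):
    print(row)
```

In this made-up data set, operator errors account for five of the eight exceptions recorded against the suspect batch, which is the kind of signal the supervisor is looking for when he reads the Pareto.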

The scenario shows the power of “Actionable Intelligence”.

The supervisor has all the information he needs to quickly and effectively analyze the situation and determine the root cause, and he can take action based on the results. The path that the supervisor decides to take in the example above is one of several that could have been used to detect and diagnose the Cell Density performance issue. It is this type of self-guided or self-serve analysis that really shows how information is consumed to meet a specific goal, and it should be the common pattern for the information required by a specific person or role. These requirements have a direct bearing on the information context and data structures that must be provided, and on the dimensions by which the metric is analyzed or “sliced and diced”. Although this seems trivial at first, the requirements that this analysis pattern places on the underlying information and data structures are significant and are a critical component of the system design. It is not enough just to collect the data; it has to be arranged in a manner that enables this unique type of analytic information consumption.
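To make the point about dimensions concrete, here is a small Python sketch of a metric stored together with its context so that it can be "sliced and diced". It is only an illustration under stated assumptions: the `slice_metric` function, the field names (`day`, `media_batch`, `reactor`, `cell_density`), and all values are hypothetical, and a real MI system would more likely hold this in a dimensional data model than in a list of dictionaries.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical readings: each record carries the metric value plus the
# context (dimensions) needed to analyze it. All values are invented.
readings = [
    {"day": "Fri", "media_batch": "M-101", "reactor": "BR-1", "cell_density": 5.5},
    {"day": "Sat", "media_batch": "M-101", "reactor": "BR-1", "cell_density": 4.5},
    {"day": "Mon", "media_batch": "M-102", "reactor": "BR-1", "cell_density": 4.0},
    {"day": "Tue", "media_batch": "M-102", "reactor": "BR-1", "cell_density": 3.0},
]

def slice_metric(records, metric, dimension):
    """Average a metric for each distinct value of the chosen dimension."""
    groups = defaultdict(list)
    for record in records:
        groups[record[dimension]].append(record[metric])
    return {key: mean(values) for key, values in groups.items()}

# The same data answers different questions depending on the dimension chosen.
print(slice_metric(readings, "cell_density", "media_batch"))
print(slice_metric(readings, "cell_density", "day"))
```

The design point is that the media batch has to be recorded alongside each cell density reading at collection time. If only the raw value and a timestamp were stored, the supervisor's correlation with the Media batch feed could not be made without further integration work.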
